Excerpts from Randy George's "Dark Side of DLP"

Randy George wrote a good article for InformationWeek titled "The Dark Side of Data Loss Prevention." I thought he made several good points that are worth repeating and expanding on.

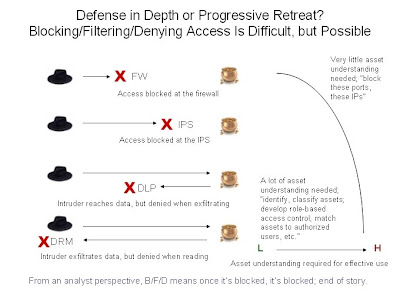

[T]here's an ugly truth that DLP vendors don't like to talk about: Managing DLP on a large scale can drag your staff under like a concrete block tied to their ankles.

This is important, and Randy explains why in the rest of the article.

Before you fire off your first scan to see just how much sensitive data is floating around the network, you'll need to create the policies that define appropriate use of corporate information.

This is a huge issue. Who is to say just what activity is "authorized" or "not authorized" (i.e., "business activity" vs. "information security incident")? I have seen a wide variety of activities that scream "intrusion!" only to hear, "well, we have a business partner in East Slobovistan who can only accept data sent via netcat in the clear." Notice I also emphasized "who." It's not enough just to recognize badness; someone has to be able to classify badness, with authority.

Once your policies are in order, the next step is data discovery, because to properly protect your data, you must first know where it is.

Good luck with this one. When you solve it at scale, let me know. This is actually the one area where I think "DLP" can really be rebranded as an asset discovery system, where the asset is data. I'd love to have a DLP deployment just to find out what is where and where it goes, under normal conditions, as perceived by the DLP product. That's a start at least, and better than "I think we have a server in East Slobovistan with our data..."
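To make the "asset discovery" idea concrete, here is a minimal sketch of what a data inventory pass might look like on a single file tree. Everything in it is an assumption for illustration: the `inventory` function, the `SSN_LIKE` pattern, and the one-megabyte read limit are hypothetical, and a real DLP product would do far more (file format parsing, fingerprinting, network shares, databases).

```python
import os
import re
from collections import Counter

# Hypothetical pattern: something shaped like a US Social Security number.
# Chosen only for illustration; real products use many richer detectors.
SSN_LIKE = re.compile(rb"\b\d{3}-\d{2}-\d{4}\b")

def inventory(root: str, pattern=SSN_LIKE, max_bytes: int = 1_000_000) -> Counter:
    """Count, per directory, the files whose first max_bytes match the pattern."""
    hits = Counter()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                with open(path, "rb") as fh:
                    data = fh.read(max_bytes)
            except OSError:
                # Unreadable files are skipped, not treated as clean.
                continue
            if pattern.search(data):
                hits[dirpath] += 1
    return hits
```

Calling `inventory("/some/share")` returns a mapping from directory to the number of matching files, which is the "what is where" half of the question. The "where it goes" half needs network visibility that a file walk cannot provide.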

Then there's the issue of accuracy... Be prepared to test the data identification capabilities you've enabled. The last thing you want is to wade through a boatload of false-positive alerts every morning because of a paranoid signature set. You also want to make sure that critical information isn't flying right past your DLP scanners because of a lax signature set.

False positives? Signature sets? What is this, dead technology? That's right. Let's say your DLP product runs passively in alert-only mode. How do you know if you can trust it? That might require access to the original data or action to evaluate how and why the DLP product came to the alert-worthy conclusion that it did.
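One way to get a feel for the "paranoid" versus "lax" tradeoff is to score candidate signatures against a small labeled sample before turning them loose. The sketch below is illustrative only: the `CARD_LIKE` regex, the two detectors, and the `SAMPLES` list are all hypothetical and not taken from any DLP product. It compares a signature that flags any 16-digit run with one that also requires the Luhn checksum to pass.

```python
import re

# Hypothetical signature: 16 digits in groups of four, optionally
# separated by a space or hyphen.
CARD_LIKE = re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b")

def luhn_ok(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:          # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

def paranoid(text: str) -> bool:
    """Alert on anything shaped like a card number."""
    return CARD_LIKE.search(text) is not None

def stricter(text: str) -> bool:
    """Alert only if a card-shaped match also passes the Luhn check."""
    return any(luhn_ok(re.sub(r"\D", "", m.group()))
               for m in CARD_LIKE.finditer(text))

def score(detector, samples):
    """Return (precision, recall) for a detector over labeled samples."""
    tp = fp = fn = 0
    for text, sensitive in samples:
        hit = detector(text)
        if hit and sensitive:
            tp += 1
        elif hit and not sensitive:
            fp += 1
        elif not hit and sensitive:
            fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical labeled sample: (text, is_actually_sensitive).
SAMPLES = [
    ("card on file: 4111 1111 1111 1111", True),    # common test number
    ("card on file: 4111.1111.1111.1111", True),    # dots defeat the regex
    ("order id 1234-5678-9012-3456", False),        # card-shaped, fails Luhn
    ("tracking 0000 0000 0000 0001", False),        # card-shaped, fails Luhn
    ("lunch at noon?", False),
]

for name, detector in (("paranoid", paranoid), ("stricter", stricter)):
    precision, recall = score(detector, SAMPLES)
    print(f"{name}: precision={precision:.2f} recall={recall:.2f}")
```

On this toy sample the paranoid signature buries one real hit among two false alarms, the stricter one removes the false alarms, and both miss the dotted number entirely. That last miss is the "flying right past your DLP scanners" case, and it only shows up because the sample was labeled by someone with access to the original data.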

Paradoxically, if the DLP product is in active blocking mode, your analysts have an easier time separating true problems from false problems. If active DLP blocks something important, the user is likely to complain to the help desk. At least you can figure out what the user did that upset both DLP and the denied user.

However, as with intrusion-detection systems, not all actions can be automated, and network-based DLP will generate events that must be investigated and adjudicated by humans. The more aggressively you set your protection parameters, the more time administrators will spend reviewing events to decide which communications can proceed and which should be blocked.

Ah, we see the dead technology -- IDS -- mentioned explicitly. Let's face it -- running any passive alerting technology, and making good sense of the output, requires giving the analyst enough data to make a decision. This is the core of NSM philosophy, and why NSM advocates collecting a wide variety of data to support analysis.

For earlier DLP comments, please see Data Leakage Protection Thoughts from last year.
