Notes on Self-Publishing a Book

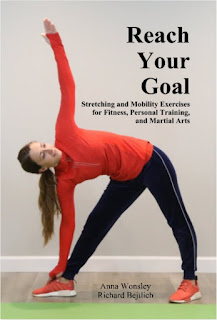

In this post I would like to share a few thoughts on self-publishing a book, in case anyone is considering that option. As I mentioned in my post on burnout, one of my goals was to publish a book on a subject other than cyber security. A friend from my Krav Maga school, Anna Wonsley, learned that I had published several books, and asked if we might collaborate on a book about stretching. The timing was right, so I agreed.

I published my first book with Pearson and Addison-Wesley in 2004, and my last with No Starch in 2013. Fourteen years is an eternity in the publishing world, and even in the last five years the economics and structure of book publishing have changed quite a bit. To better understand the changes, I had dinner with one of the finest technical authors around, Michael W. Lucas. We met before I became interested in this book, because I had been wondering about publishing books on my own. MWL started in traditional publishing like me, but has since become a full-time ...